Lecture 17: Page Tables, Address Translation

🎥 Lecture video (Brown ID required)

💻 Lecture code

❓ Post-Lecture Quiz (due 11:59pm, Wednesday, April 2).

Virtual Memory (recap)

The basic idea behind virtual memory is to create, for each user-space process, the illusion that it runs alone on the computer and has access to the computer's full memory. In other words, we seek to give different processes different views of the actual memory.

Recall that the (physical) memory in DemoOS is roughly laid out as follows:

0x0

+--------------------------------------------------------------------+

null page ->|R |

+--------------------------------------------------------------------+

0x40000 -->|KKKKKKKKKKKKKKKKKKKKKKKKKKKKKK K| <-- kernel stack

(kernel mem)+--------------------------------------------------------------------+

| RRRRRRRRRRRRRRRRRRRRRCRRRRRRRRRR| console @ 0xB8000

+--------------------------------------------------------------------+

|RRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRRR|

+--------------------------------------------------------------------+

0x100000 -->|Code|Data|Heap ... Alice's process memory ... Stack| <-- 0x13ffff

+--------------------------------------------------------------------+

0x140000 -->|Code|Data|Heap ... Eve's process memory ... Stack| <-- 0x17ffff

+--------------------------------------------------------------------+

| |

+--------------------------------------------------------------------+

| |

+--------------------------------------------------------------------+

0x1fffffff (MEMSIZE_PHYSICAL - 1)

What we'd like to achieve through virtual memory is that Alice's user-space process has the following view of this memory:

0x0

+--------------------------------------------------------------------+

null page ->| XXX NO ACCESS XXX |

+--------------------------------------------------------------------+

0x40000 -->| XXX NO ACCESS in userspace XXX | <-- kernel stack

(kernel mem)+--------------------------------------------------------------------+

| XXX NO ACCESS XXX C | console @ 0xB8000 (can access)

+--------------------------------------------------------------------+

| XXX NO ACCESS XXX |

+--------------------------------------------------------------------+

0x100000 -->|Code|Data|Heap ... Alice's process memory ... Stack| <-- 0x13ffff

+--------------------------------------------------------------------+

0x140000 -->| XXX NO ACCESS (Eve's memory) XXX | <-- 0x17ffff

+--------------------------------------------------------------------+

| |

+--------------------------------------------------------------------+

| |

+--------------------------------------------------------------------+

0x1fffffff (MEMSIZE_PHYSICAL - 1)

... while Eve's user-space process should see this view:

0x0

+--------------------------------------------------------------------+

null page ->| XXX NO ACCESS XXX |

+--------------------------------------------------------------------+

0x40000 -->| XXX NO ACCESS in userspace XXX | <-- kernel stack

(kernel mem)+--------------------------------------------------------------------+

| XXX NO ACCESS XXX C | console @ 0xB8000 (can access)

+--------------------------------------------------------------------+

| XXX NO ACCESS XXX |

+--------------------------------------------------------------------+

0x100000 -->| XXX NO ACCESS (Alice's memory) XXX | <-- 0x13ffff

+--------------------------------------------------------------------+

0x140000 -->|Code|Data|Heap ... Eve's process memory ... Stack| <-- 0x17ffff

+--------------------------------------------------------------------+

| |

+--------------------------------------------------------------------+

| |

+--------------------------------------------------------------------+

0x1fffffff (MEMSIZE_PHYSICAL - 1)

The memory labeled XXX NO ACCESS XXX here should behave as if it didn't exist: in particular,

any access to a memory page in these regions should cause an exception (this is called a "page fault")

that transfers control into the kernel.

Note that neither Alice nor Eve need to know here that they're not looking at the real picture of memory, but rather at a specific fiction created for their process. From the perspective of each process, it may as well be running on a computer with the physical memory laid out as shown in these views. (This is the power of virtualization: the notion of faking out an equivalent abstraction over some hardware without changing the interface.)

To achieve this, we need a layer of indirection between the addresses user-space processes use and the physical memory addresses that correspond to actually locations in RAM. We achieve this indirection by mapping virtual pages to physical pages through page tables.

Page Tables: Intro

Page tables are what let us actually convert a virtual address to physical address. Each user-space process gets its own page table, which it uses to perform that conversion.

Making page tables work efficiently requires some non-trivial data structure design. We'll work towards the actual design real computers use by considering a set of "strawman" designs that don't quite work.

Strawman 1: direct, byte-level lookup table.

Let's first consider what a very simple page table that maps virtual address to physical addresses might look

like. Each entry in the page table would store a virtual address (8 bytes) and a physical address that it maps to

(another 8 bytes), or NONE if there is no physical address for this virtual address and access should cause a

fault into the kernel. Since memory on our computers is divided into individually-addressable bytes (recall the

post-office boxes from lecture 1!), we'd need an entry in the table for each byte. (On a 64-bit computer, that's

264 entries in theory; but real 64-bit machines only support up to 248 actual addresses.)

For example, Alice's page table might look as follows (assuming we're running on a computer with a full 64-bit address space with 48 usable address bits):

+----------------------+-----------------------+ | Virtual address (8B) | Physical address (8B) | +----------------------+-----------------------+ --- | 0x0 | NONE | | | ... | ... | | | 0x100000 | 0x100000 | | | 0x100001 | 0x100001 | | | ... | ... | | 2^48 entries | 0x140000 | NONE | | | 0x140001 | NONE | | | ... | ... | | | 0xFFFFFFFFFFFF | NONE | | +----------------------+-----------------------+ ---

There is a rather obvious problem with this plan: if we needed 8 + 8 = 16 bytes of address translation for every byte of memory on the computer, and if we'd store a table with 248 entries, storing that table would use sixteen times as much memory as the computer actually has!

Strawman 2: store only per-page entries.

Perhaps we can improve on this, and since memory is divided into pages, we might as well only store entries for

each 4096-byte page. Specifically, we can use the lower 12 bits of the virtual address as an offset into

the page (since we're only storing page-granularity mappings in the page table).

In other words, we slice the 64-bit virtual address as follows:

63 47 11 0

+---------+-----------------------+-----------+

| UNUSED | | offset |

+---------+-----------------------+-----------+

|- in page table, w/ bottom bits 0 -|

This means that instead of 248 entries, we would store "only" 236 entries (a page is 4096 = 212 bytes, so 248 / 212 = 236 entries).

Question: Why is the offset 12 bits?

Answer: We define the size of a page to be 4096 = 212 bytes. We want to be able to index into any byte of a destination physical page. So, we need 12 bits to represent every possible offset.

With this scheme, Alice's page table would look as follows:

+----------------------+-----------------------+ | Virtual address (8B) | Physical address (8B) | +----------------------+-----------------------+ --- | 0x0 | NONE | | | ... | ... | | | 0x100000 | 0x100000 | | | 0x101000 | 0x101000 | | | ... | ... | | 2^36 entries | 0x140000 | NONE | | | 0x141000 | NONE | | | ... | ... | | | 0xFFFFFFFFFFFF | NONE | | +----------------------+-----------------------+ ---

Each entry still uses 16 (= 24) bytes of memory, so that's a total of 240 bytes for the page table. Unfortunately for us, that's still 1,024 GB, way more memory than our computers have!

Strawman 3: store only physical addresses.

Perhaps we can avoid storing the virtual address entirely, and thus save 8 bytes. To eachieve this, we can turn

the virtual address into an index into the table.

In other words, we slice the 64-bit virtual address as before and use the upper 36 bits as the index into the table:

63 47 11 0 +---------+-----------------------+-----------+ | UNUSED | index (upper 36 bits) | offset | +---------+-----------------------+-----------+

The table would now look like this:

+-----------------------+

| Physical address (8B) |

+-----------------------+ ---

| NONE | |

index from addr | ... | |

(36 bits) | 0x100000 | |

------------------> | 0x101000 | |

| ... | | 2^36 entries

| NONE | |

| NONE | |

| ... | |

| NONE | |

+-----------------------+ ---

When we use the index to find the corresponding entry in the page table page, we get a physical page address. As before, the offset from the virtual address tells us the offset into the physical page. But this scheme still uses 512 GB (8 bytes times 236 entries) of memory for the page table.

One observation to make here is that ideally we would store nothing for virtual address that map to

NONE. A sparse data structure might help us achieve this! We could use something like a

linked list (which would allow us to skip large, empty parts of the address space), but the O(N) worst-case

access complexity would make such a plan slow. We need something that supports fast lookups, but still uses

little space.

x86-64 Page Tables and Address Translation

The actual page table structure used in x86-64 computers combines the tricks from the strawman designs 2 and 3 above, and adds a clever tree structure into the mix.

We can think of a multi-level page table structure (x86-64 uses four levels; x86-32 used two) as a tree. Multiple levels reduce space needed because when we look up the physical page for a given virtual address, we may visit a branch of the tree that tells us there's actually no valid physical page for us to access. In that case, we just stop searching. So, multiple levels means we can have a sparse tree.

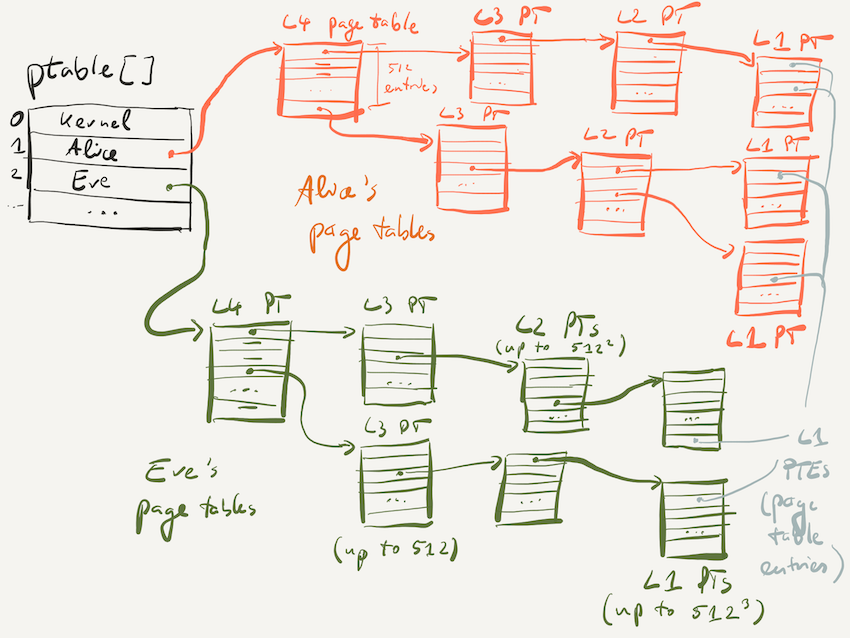

It's important to note that every process has its own page table. In WeensyOS, the kernel code contains a global

array ptable whose slots contain the addresses of the top-level (L4) page table for each process. This way,

the kernel can locate and modify the page tables for every process.

Each L4 page table contains 512 8-byte entries that can either either be empty or contain the address of an L3 page table. The page has 512 entries because dividing the page size of 4096 bytes by 8 yields 512.

The picture below shows an example of how the page tables for Alice's and Eve's process might be structured. The L4, L3, and L2 page tables each contain entries that are either empty or contain the address of the page containing a lower-level page table.

Why does the kernel have its own page table?

Modern computer architectures, including the x84-64 architecture, accelerate virtual address translation via page tables in hardware. As a consequence, it's actually impossible to turn virtual memory off and work directly with physical addresses. Consequently, even the kernel needs to use page tables to translate virtual addresses! It may use an identity mapping to make virtual addresses of key resources (such as the console, or special I/O memory) identical to their physical addresses, but it does need to go through the translation.

OS kernels, like Linux's and the WeensyOS kernel, can actually operate with different active page tables. For example, when a process makes a system call, the kernel executes in process context, meaning that the active page tables are those of the calling user-space process. But in other contexts, such as when handling an interrupt or at bootup, the kernel is not running on behalf of a userspace process and uses the kernel (process 0) page tables.

This structure means that there is 1 L4 page table, up to 512 L3 page tables (each of 512 L4 PT entries can point to a single L3 PT page), up to 5122 L2 page tables, and up to 5123 L1 page tables. In practice, there are far fewer, as the picture shows. Using all 5123 L1 page tables would allow addressing 5123 × 512 = 5124 ≈ 68 billion pages. 68 billion pages of 4096 bytes each would cover 256 TB of addressable memory; the page tables themselves would be 512 GB in size. Most programs only need a fraction of this space, and by leaving page table entries empty at high levels (e.g., L3 or L4), entire subtrees of the page table structure don't need to exist.

The L1 page table entries are special, as they supply the actual information to translate a physical address into a virtual one. Instead of storing another page table's address, the L1 page table entries (PTEs) contain both part of the physical address (PA) that a given virtual address (VA) translates into, and also the permission bits that control the access rights on the page (PTE_P, PTE_W, and PTE_U; as well as other bits we don't cover in detail in this course).

The access permission bits are stored in the lowest 12 bits of the PTE, since those bits aren't needed as part of the physical address. Recall that the lowest 12 bits address a byte within the page, and that we use the offset (lowest 12 bits) from the virtual address directly; therefore, the lowest 12 bits of the page's physical address are always zero, making them available for metadata storage. (The top bit, i.e., bit 63, is also used for metadata: it represents the "execute disable" or NX/XD bit, which marks data pages as non-executable.)

Virtual Address Translation

The x86-64 architecture uses four levels of page table. This is reflected in the structure of a virtual address:

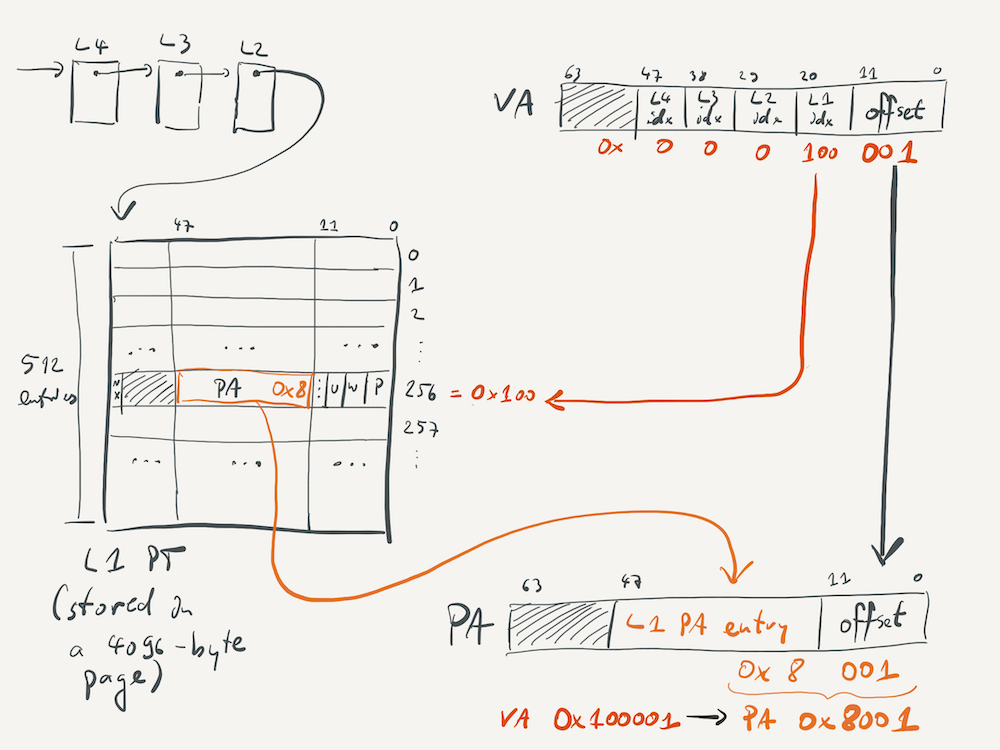

63 47 38 29 20 11 0

+---------+------+------+------+------+-----------+

| UNUSED | L4 | L3 | L2 | L1 | offset |

+---------+------+------+------+------+-----------+

|9 bits|9 bits|9 bits|9 bits| 12 bits

In x86-64 virtual address has 64 bits, but only the first 48 bits are meaningful. We have 9 bits to index into each page table level, and 12 bits for the offset. (This means that the top 16 bits are leftover and unused.)

Each page table "chunk" at each layer has 512 entries. Why 512? Because the chunk of the page table itself needs to fit into a page, which is 212 = 4096 bytes large. Each entry is an 8-byte address, so we can fit 29 = 512 entries into a page.

This is also why the indexes in the virtual address are each 9 bits long! We need 9 bits to choose one out of the 512 entries in each page table chunk.

Each L4 page table entry that is present holds the address of a L3 page table, and each L3 page table entry that is present holds the address of an L2 page table. Each L2 page table entry in turn holds the address of an L1 page table. Entries in the L1 page table actually hold physical addresses (as they are the bottom of the tree) and also store the access bits in the lower 12 bits, where the page address is always all zeroes.

There is only one L4 page table per process, which forms the top of the tree. But there are up to 512 L3 page tables per process, up to 5122 L2 page tables, and up to 5123 L1 page tables per process. In reality, there will be far fewer than this, since no process will actually be using the full virtual address space. Instead, there will be many large gaps in the virtual address space, and there will be no lower-level page tables for these address ranges.

Example

The picture below zooms in on the L1 page table used in translating VA 0x10'0001 to PA 0x8001. Note that the indexes into the L4, L3, and L2 page tables are all zero, since the upper bits of the VA are all zero. (The full 48-bit VA is 0x0000'0010'0001.) The offset bits (lowest 12 bits) correspond to hexadecimal value 0x001, and they get copied straight into the VA. The next nine bits (bit 12 to 21) are, in binary, 0b1'0000'0000 (hex: 0x100, decimal 256). They serve as the index into the L1 page table, where the 256th entry contains the value 0x8 (0x0'0000'0008 as full 36-bit value) in bits 12 to 47. This value gets copied into bits 12 to 47 of the PA, and combined with the offset of 0x001 results in the full PA of 0x0000'0000'8001.

Page tables are the fundamental abstraction for virtual memory on modern computers. While you don't need to remember the exact details of the x86-64 page table structure, you should understand why the structure is designed this way, and how it works – for example, you might get asked to design a page table structure for another architecture in the quiz!

Page Table Lookups (x86-64)

A successful lookup (finding a physical address from a virtual address) goes as follows in the case of x86-64 page tables:

- Use the address in the

%cr3register to find the L4 page table address - Use the L4 index from the virtual address to get the L3 page table address

- Use the L3 page table address and the L3 index to get a L2 page table address

- Use the L2 page table address and the L2 index to get a L1 page table address

- Use the L1 page table address and the L1 index to get the destination physical page

- Use the destination physical page and the offset to get actual physical address within that destination physical page

Finally, one important detail of virtual address translation is that user-space processes don't need to switch into

the kernel to translate a VA to a PA. If every memory access from user-space required a protected control transfer into

the kernel, it would be horrendously slow! Instead, even though the process page tables are not writable or accessible from

userspace, the CPU itself can access them when operating in user-space. This may sound strange, but it works because the

CPU stores the physical address of the L4 page table in a special register, %cr3 on x86-64. This

register is privileged and user-space code cannot change its value (trying to do so traps into the kernel). When dereferencing

a virtual address, the CPU looks at the L4 page table at the address stored in %cr3 and then follows the

translation chain directly (i.e., using logic built into the chip, rather than assembly instructions). This makes address

translation much faster – however, it turns out that even this isn't fast enough, and the CPU has a special

cache for address translations. This is called the Translation Lookaside Buffer (TLB), and it stores the physical

addresses for the most recently translated virtual addresses.

Summary

Today, we further understood the details of page tables, which are mapping tables that help translating virtual addresses (which user-space programs work with) to physical addresses (which refer to real memory addresses in the computer's DRAM chips). Page tables in the x86-64 architecture follow an ingenious four-level design that allows for chunking into page-sized units (so page tables can themselves be stored in physical memory pages) and for a high-level cutoff that avoids wasting space storing translations for virtual addresses that map to nothing (i.e., which have no corresponding physical page).

In WeensyOS, you will interact with page tables through the vmiter and ptiter

helpers, so you won't need to deal with the full complexity of x86-64 page tables. But, under the hood, that

structure is exactly what these helpers work with. vmiter cycles through virtual addresses and

lets you look up their physical address translations, and ptiter cycles through mappings installed

in the page tables, allowing you to efficiently jump over large gaps in the virtual address space.